Many companies have clear sustainability targets of their own and are subject to requirements from interest groups and legislators to reduce their energy consumption.

At the same time, the energy consumption of IT is rising as AI and related applications demand ever more computing power, making energy an ever greater cost factor. As a result, many companies are critically examining information technology this year as a driver of energy consumption and carbon footprint, and IT equipment such as servers and cooling systems is an important lever for reducing the energy consumption of data centers. But how can companies optimize their infrastructure and take social responsibility for sustainable development? IT infrastructure provider KAYTUS shows the four areas in which improvements are necessary and worthwhile, and what deserves particular attention.

Positive and negative factors in Europe’s environmental balance sheet

The EU has continuously reduced its greenhouse gas emissions since 1990, achieving an overall reduction of 32.5 percent by 2022. Although the amount of carbon removed from the atmosphere in the EU increased compared with the previous year, the EU is not yet on track to meet its 2030 target of 310 million tonnes of net CO2 removals. EU Member States need to significantly step up their implementation efforts and accelerate emission reductions to reach the target of a 55 percent net reduction in greenhouse gas emissions by 2030 (compared with 1990) and climate neutrality by 2050.

Complex AI applications increase energy requirements

With the development of AI applications such as generative AI, machine learning (ML) and autonomous driving, the energy requirements of servers and the power density of chips and server nodes continue to increase. The power consumption of AI chips has risen from 500 watts to 700 watts and is expected to exceed 1,000 watts in the future. As processors draw more power, the amount of heat the entire device must dissipate rises as well. Authorities in the EU and worldwide have set strict energy-saving requirements to make data centers more environmentally friendly. The EU's latest Energy Efficiency Directive requires data centers with an installed IT power demand of at least 500 kilowatts to publicly report on their energy performance every year.

By 2030, data centers are expected to account for 3.2 percent of total electricity demand in the EU, up from about 2.7 percent in 2018, an increase of 18.5 percent. To achieve the environmental targets that have been set, companies should therefore take their responsibility for sustainable development seriously. As computing demand grows, optimizing IT infrastructure has become an important lever for companies to reduce energy consumption and develop sustainably.

Four important approaches for a greener data center

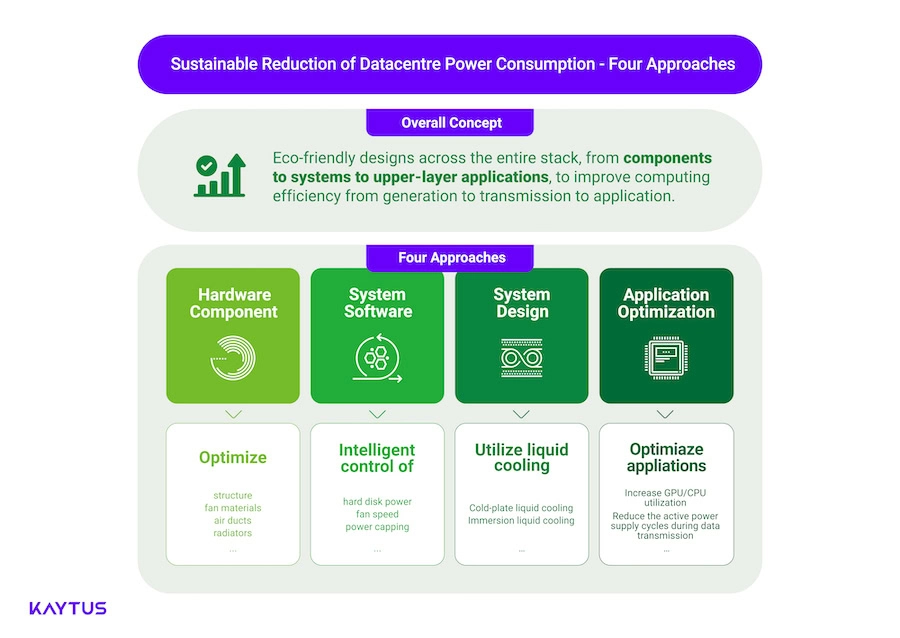

But how can companies minimize the power consumption of data centers? Green computing optimization should focus on four factors, all aimed at increasing energy efficiency: hardware design, software strategy, system-level refinements and application optimization. Let's look at each approach in detail.

Starting points for hardware components

At the hardware component level, optimizing the structural design of components such as fans, air ducts and heat sinks can improve heat dissipation efficiency. For example, improved front and rear air inlets and fans can increase airflow by up to 15 percent and distribute it more evenly, maximizing cooling performance. IT infrastructure providers now use simulations to optimize the shape, spacing and angle of attack of fan blades and to reduce vibration. In addition, the motor efficiency, internal structure and materials of the fans can be optimized to maximize airflow and reduce energy consumption.
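Why better fan and duct design saves energy follows from the fan affinity laws: for a given fan, airflow rises roughly in proportion to speed, while the power drawn rises roughly with the cube of speed. The sketch below is illustrative only. The 20 W fan rating is a hypothetical value, and it assumes the improved design delivers 15 percent more airflow at the same full-speed power, so the original airflow can be reached at a lower speed.

```python
def speed_ratio_for_airflow(required_airflow: float, full_speed_airflow: float) -> float:
    """Fraction of full speed needed to move the required airflow (airflow ~ speed)."""
    if full_speed_airflow <= 0:
        raise ValueError("full_speed_airflow must be positive")
    return required_airflow / full_speed_airflow


def fan_power_w(full_speed_power_w: float, speed_ratio: float) -> float:
    """Approximate fan power at a reduced speed (power ~ speed cubed)."""
    if speed_ratio < 0:
        raise ValueError("speed_ratio must be non-negative")
    return full_speed_power_w * speed_ratio ** 3


# Hypothetical fan: 20 W at full speed, moving 100 airflow units in the old design.
baseline_airflow = 100.0
improved_airflow = baseline_airflow * 1.15   # assumed +15 percent at full speed

ratio = speed_ratio_for_airflow(baseline_airflow, improved_airflow)
print(f"speed needed: {ratio:.1%} of full speed")
print(f"fan power:    {fan_power_w(20.0, ratio):.1f} W instead of 20.0 W")
```

Because of the cubic term, running the fan about 13 percent slower cuts its power by roughly a third in this idealized model; real fans deviate from the affinity laws, especially at low speeds.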

Air ducts with low flow resistance make the airflow more stable and efficient. A horizontal design and honeycomb-shaped air openings with a wave profile are recommended to break up internal turbulence effectively, which can increase heat dissipation efficiency by more than 30 percent.

IT and data center managers are advised to consider several cooling solutions to improve the heat dissipation of high-performance processors. With specialized heat sinks and techniques such as standard, T-shaped, siphon and cold plate heat dissipation, the heat dissipation efficiency of the entire server system can be increased by more than 24 percent and the power consumption of a single server node reduced by 10 percent, while still meeting the cooling requirements of a 1U two-socket server with 350 W processors.
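Whether a given heat sink can cool a 350 W processor can be checked with a simple steady-state thermal-resistance model: the case temperature equals the inlet air temperature plus the heat load times the heat sink's case-to-air thermal resistance. In this sketch the 35 °C inlet, the 85 °C case limit and the three candidate resistance values are hypothetical numbers chosen for illustration, not measured data for any of the techniques named above.

```python
def max_thermal_resistance(t_case_max_c: float, t_inlet_c: float, power_w: float) -> float:
    """Largest case-to-air thermal resistance (K/W) that keeps the case at or below the limit."""
    if power_w <= 0:
        raise ValueError("power_w must be positive")
    return (t_case_max_c - t_inlet_c) / power_w


def case_temperature_c(t_inlet_c: float, power_w: float, r_th_k_per_w: float) -> float:
    """Steady-state case temperature: inlet temperature plus heat load times resistance."""
    return t_inlet_c + power_w * r_th_k_per_w


# Hypothetical design point: one 350 W processor, 35 °C inlet air, 85 °C case limit.
T_INLET, T_CASE_MAX, POWER = 35.0, 85.0, 350.0
budget = max_thermal_resistance(T_CASE_MAX, T_INLET, POWER)
print(f"required resistance: <= {budget:.3f} K/W")

# Hypothetical candidate heat sinks with assumed resistances.
for name, r_th in [("candidate A", 0.18), ("candidate B", 0.14), ("candidate C", 0.05)]:
    t_case = case_temperature_c(T_INLET, POWER, r_th)
    verdict = "ok" if r_th <= budget else "too hot"
    print(f"{name}: R = {r_th:.2f} K/W -> case {t_case:.1f} °C ({verdict})")
```

The model ignores preheating: in a two-socket 1U chassis the downstream processor sees air already warmed by the upstream one, so its effective inlet temperature would be higher.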

Starting points for software components

At the software component level, energy-saving measures such as per-disk power control, intelligent fan speed adjustment and power capping can reduce the overall power consumption of the server by more than 15 percent.

IT specialists can automatically align the power control of individual hard disks with the heat dissipation strategy: a CPLD (complex programmable logic device) switches individual hard disks on and off, system throughput is limited to a subset of the disks, and the remaining disks are put into sleep mode. This can cut hard disk power consumption by around 70 percent. Adjusting the heat dissipation strategy accordingly allows lower fan speeds and reduces the power consumption of the data center's cooling units. Compared with conventional server designs, power consumption per TB can be reduced by 31.5 percent, while saving 40 percent of data center space and reducing total operating costs by more than 30 percent.
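The idea of limiting I/O to a subset of disks and sending the rest to sleep can be illustrated with a simple idle-threshold policy. This is a minimal sketch: the Disk class, the 8 W active and 1 W standby figures and the 10-minute threshold are hypothetical, and in a real server the on/off decision would be carried out by firmware (for example through the CPLD) rather than by flipping a flag in Python.

```python
from dataclasses import dataclass

# Hypothetical per-disk power figures; real values depend on the drive model.
ACTIVE_W = 8.0
STANDBY_W = 1.0


@dataclass
class Disk:
    name: str
    idle_s: float          # seconds since the disk last served an I/O request
    active: bool = True    # False once the disk has been put into standby


def apply_standby_policy(disks: list[Disk], idle_threshold_s: float) -> list[str]:
    """Mark every active disk that has been idle for at least idle_threshold_s
    as standby. Returns the names of the disks that were switched."""
    switched = []
    for disk in disks:
        if disk.active and disk.idle_s >= idle_threshold_s:
            disk.active = False
            switched.append(disk.name)
    return switched


def total_power_w(disks: list[Disk]) -> float:
    """Sum of per-disk power, using the active or standby figure for each disk."""
    return sum(ACTIVE_W if d.active else STANDBY_W for d in disks)


# Twelve disks, of which only the first three are serving I/O.
disks = [Disk(f"disk{i}", idle_s=0.0 if i < 3 else 3600.0) for i in range(12)]
before = total_power_w(disks)
switched = apply_standby_policy(disks, idle_threshold_s=600.0)
after = total_power_w(disks)
print(f"standby: {len(switched)} disks, power {before:.0f} W -> {after:.0f} W")
```

With these assumed figures, nine disks go to standby and disk power falls from 96 W to 33 W. The threshold trades energy against latency, since a sleeping disk must spin up again before it can serve a request.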

Built-in sensors collect temperature data in real time at various points in a server. Using decentralized intelligent control based on the collected and evaluated data, the system adjusts the fan speed separately for different air ducts, achieving energy-saving fan control and a precise air supply.
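Per-duct fan control of this kind is often built on a fan curve that maps measured temperature to fan duty cycle. The sketch below assumes a simple linear curve (20 percent duty at or below 30 °C, 100 percent at or above 70 °C) and drives each air-duct zone from its hottest sensor; the zone names, thresholds and readings are hypothetical, and production firmware typically adds hysteresis or a PID loop on top.

```python
def fan_duty(temp_c: float, t_low: float = 30.0, t_high: float = 70.0,
             min_duty: float = 20.0, max_duty: float = 100.0) -> float:
    """Map a temperature (°C) to a fan duty cycle (%) using a linear fan curve."""
    if temp_c <= t_low:
        return min_duty
    if temp_c >= t_high:
        return max_duty
    fraction = (temp_c - t_low) / (t_high - t_low)
    return min_duty + fraction * (max_duty - min_duty)


def zone_duties(readings: dict[str, list[float]]) -> dict[str, float]:
    """Drive each air-duct zone from its hottest sensor, so a cool zone does
    not spin up just because another part of the server is hot."""
    return {zone: fan_duty(max(temps)) for zone, temps in readings.items()}


# Hypothetical sensor readings (°C) grouped by air duct.
readings = {
    "cpu_duct": [62.0, 58.5],
    "disk_duct": [34.0, 36.0],
    "psu_duct": [41.0],
}
for zone, duty in zone_duties(readings).items():
    print(f"{zone}: {duty:.0f} % duty")
```

Because fan power rises roughly with the cube of speed, letting the disk and power-supply zones run slowly while only the CPU zone spins up saves considerably more energy than running every fan at the duty the hottest component requires.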

Further starting points for IT systems

To use computing power more efficiently, reduce equipment idle time and thus lower energy consumption, IT and data center experts are adapting their entire IT systems to their specific conditions. For heat dissipation, especially in high-density servers, AI servers and rack-scale servers, they rely on hybrid air-liquid or pure liquid cooling designs, which can reduce the PUE (Power Usage Effectiveness) value to below 1.2.
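PUE is defined as the total energy used by a data center facility divided by the energy delivered to its IT equipment, so a value of 1.0 would mean no overhead at all for cooling, power distribution and the like. The short calculation below uses hypothetical monthly figures to show what a PUE below 1.2 means in practice.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("it_equipment_kwh must be positive")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical monthly energy figures in kWh.
it_load = 500_000.0
cooling = 70_000.0
power_distribution_and_other = 20_000.0

total = it_load + cooling + power_distribution_and_other
value = pue(total, it_load)
overhead_kwh = total - it_load
print(f"PUE = {value:.2f}, overhead = {overhead_kwh:,.0f} kWh per month")
```

At a PUE of 1.18, every kilowatt-hour consumed by the servers costs an extra 0.18 kWh of facility overhead, so lowering PUE cuts energy use without touching the IT load itself.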

Cold plate liquid cooling is particularly effective here because it is especially suitable for high-power components such as processors and memory, which account for more than 80 percent of a server's power consumption. The liquid cooling modules are also compatible with a wide range of common cooling connectors, which lowers deployment costs, while the modules themselves effectively reduce the power consumption of the entire server.

For example, cold plate liquid cooling can meet the cooling needs of a 1,000 W chip and support 100 kW of heat exchange in a single server rack. Such liquid-cooled racks often include dynamic monitoring devices that enable intelligent control of the IT environment, as well as node-level liquid leak detection that raises alarms in real time. These rack systems are also notably energy efficient: compared with conventional air cooling, they offer higher computing density, 50 percent higher heat dissipation efficiency and 40 percent lower power consumption.
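The chip and rack figures above can be related to coolant flow with the basic heat-transfer relation Q = ṁ · c_p · ΔT, where Q is the heat removed, ṁ the coolant mass flow, c_p its specific heat and ΔT the temperature rise between supply and return. The sketch assumes plain water (c_p of about 4186 J/(kg·K), density of about 0.99 kg/L) and a hypothetical 10 K temperature rise; glycol mixtures, which many loops use, have a lower specific heat and need somewhat more flow.

```python
WATER_CP = 4186.0        # J/(kg*K), specific heat of water (approx.)
WATER_DENSITY = 0.99     # kg/L, water at roughly 40 °C (approx.)


def coolant_flow_l_per_min(heat_w: float, delta_t_k: float,
                           cp: float = WATER_CP, density: float = WATER_DENSITY) -> float:
    """Volumetric coolant flow needed to carry heat_w watts with a
    supply-to-return temperature rise of delta_t_k, from Q = m_dot * cp * dT."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_w / (cp * delta_t_k)
    return mass_flow_kg_s / density * 60.0


for label, heat in [("1,000 W chip", 1_000.0), ("100 kW rack", 100_000.0)]:
    print(f"{label}: {coolant_flow_l_per_min(heat, 10.0):.1f} L/min at dT = 10 K")
```

Under these assumptions a 1,000 W chip needs roughly 1.4 L/min and a 100 kW rack about 145 L/min; halving the allowed temperature rise doubles the required flow.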

In addition, liquid-cooled servers support high coolant inlet temperatures, e.g. 45°C (113°F). The correspondingly high temperature of the returning coolant allows free-cooling technology to be used more extensively, which significantly reduces energy consumption. When liquid-cooled systems are designed to operate efficiently even at ambient temperatures as high as 45°C (113°F), data center energy consumption can be reduced further.
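The benefit of warm-water cooling can be estimated by counting the hours in which a dry cooler alone, without a chiller, can supply coolant at the required temperature. This sketch assumes the dry cooler's outlet equals the ambient temperature plus a fixed 5 K approach and uses a synthetic sinusoidal temperature profile; the profile, the approach value and the 18 °C comparison setpoint are all hypothetical.

```python
import math


def free_cooling_hours(hourly_ambient_c, supply_setpoint_c: float, approach_k: float) -> int:
    """Hours in which ambient air alone can cool the loop to the supply setpoint,
    assuming the dry cooler outlet is ambient temperature plus approach_k."""
    return sum(1 for t in hourly_ambient_c if t + approach_k <= supply_setpoint_c)


# Synthetic year: 10 °C mean, +/-12 K seasonal swing, +/-6 K day/night swing.
HOURS_PER_YEAR = 8760
ambient = [
    10.0
    + 12.0 * math.sin(2 * math.pi * h / HOURS_PER_YEAR)
    + 6.0 * math.sin(2 * math.pi * h / 24)
    for h in range(HOURS_PER_YEAR)
]

for setpoint in (18.0, 45.0):
    hours = free_cooling_hours(ambient, setpoint, approach_k=5.0)
    print(f"supply {setpoint:.0f} °C: {hours} of {HOURS_PER_YEAR} hours free-cooled")
```

With a 45 °C supply, every hour of this mild synthetic climate qualifies for free cooling, while an 18 °C supply would need mechanical cooling during the warmer hours.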

Starting points for optimizing end applications

Finally, the resource consumption of end applications also plays a decisive role in green computing, so their computing load must be optimized as well.

To do this, IT professionals should optimize their server workloads to increase GPU/CPU utilization and consolidate them onto fewer servers. Pooling compute power and allocating compute resources at a fine granularity helps maximize GPU utilization, ranging from multiple instances on a single board to large-scale parallel computing across multiple machines and boards. This can raise the utilization of cluster computing power to over 70 percent.
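Consolidating workloads onto fewer servers is essentially a bin-packing problem. The sketch below uses first-fit decreasing, a common bin-packing heuristic that is not necessarily what any particular scheduler uses, and expresses each workload as a hypothetical fraction of one server's capacity. Setting the capacity parameter below 1.0 leaves headroom for load spikes.

```python
def consolidate(loads: list[float], capacity: float = 1.0) -> list[list[float]]:
    """First-fit decreasing: place each workload, largest first, on the first
    server with enough spare capacity; open a new server only if none fits."""
    eps = 1e-9  # tolerance for floating-point rounding in the capacity check
    servers: list[list[float]] = []
    for load in sorted(loads, reverse=True):
        if load > capacity + eps:
            raise ValueError(f"workload {load} exceeds server capacity {capacity}")
        for server in servers:
            if sum(server) + load <= capacity + eps:
                server.append(load)
                break
        else:  # no existing server had room
            servers.append([load])
    return servers


# Nine hypothetical workloads that would otherwise each occupy their own server.
loads = [0.3, 0.2, 0.5, 0.4, 0.1, 0.6, 0.3, 0.2, 0.4]
placement = consolidate(loads, capacity=0.8)   # keep 20 percent headroom per server
utilization = sum(loads) / len(placement)
print(f"{len(loads)} servers -> {len(placement)} servers, "
      f"average utilization {utilization:.0%}")
```

Here the nine workloads fit on four servers, raising average utilization from about 33 percent to about 75 percent; the idle servers can then be powered down or reassigned.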

Another resource-saving strategy is asynchronous polling, which reduces the number of active cycles of battery-powered devices during intermittent data transmission. Compared with continuous polling at fixed intervals, this shortens active communication time and lowers total power consumption.
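The saving comes from the number of radio wake-ups. In this sketch, "asynchronous" is modeled, as an assumption, as the device sleeping until it is signalled that data is pending, plus an occasional keep-alive. The 5 mJ wake-up cost, the 10 s polling interval, the 12 events per hour and the 10-minute keep-alive are hypothetical values; sleep power is the same in both cases and is therefore left out.

```python
# Hypothetical energy cost of one radio wake-up and exchange.
WAKE_ENERGY_MILLIJOULES = 5.0


def fixed_polling_wakeups(duration_s: float, interval_s: float) -> int:
    """Wake-ups when the device polls at a fixed interval, data or not."""
    return int(duration_s // interval_s)


def event_driven_wakeups(num_events: int, duration_s: float, keepalive_s: float) -> int:
    """Wake-ups when the device only wakes for pending data, plus a periodic
    keep-alive so the connection is not dropped."""
    return num_events + int(duration_s // keepalive_s)


HOUR_S = 3600.0
polling = fixed_polling_wakeups(HOUR_S, interval_s=10.0)
event_driven = event_driven_wakeups(num_events=12, duration_s=HOUR_S, keepalive_s=600.0)
print(f"fixed polling: {polling} wake-ups, {polling * WAKE_ENERGY_MILLIJOULES:.0f} mJ")
print(f"event-driven:  {event_driven} wake-ups, {event_driven * WAKE_ENERGY_MILLIJOULES:.0f} mJ")
```

With these assumptions the device wakes 18 times per hour instead of 360, so the radio's active energy drops by a factor of 20; the real saving depends on how often data actually arrives.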

Conclusion

Although there are numerous starting points for green computing, one particularly important aspect is continuously improving the data center structure to increase the efficiency of computing power from generation through transmission to application. The main challenge for system manufacturers is to test and refine the design of their system architecture, power optimization and heat dissipation. On the one hand, this minimizes the energy requirements of the computers and the emissions caused by their power consumption. On the other hand, the computing power generated can be used as fully as possible at the application level, reducing wasted computing resources. By prioritizing IT infrastructure that meets both their operational efficiency requirements and CO2 emission regulations, companies can reduce costs and fulfill their responsibility for sustainable development.