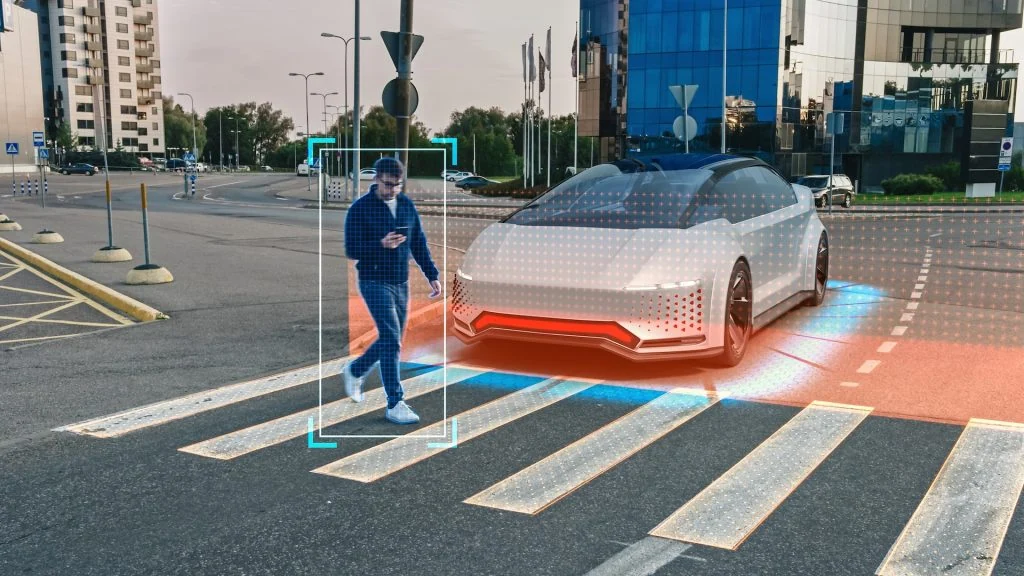

AI systems are shaping the automotive industry, but progress toward autonomous driving is faltering. Weaknesses such as a lack of transparency and robustness are holding the technology back. Experts see "responsible AI" as the solution.

AI is revolutionizing the automotive industry – but not everywhere

The automotive industry has been using AI for a long time, for example to make production more efficient or to increase safety and comfort. Communication between vehicles and their surroundings improves the driving experience. However, autonomous driving, one of the industry's central ambitions, is not progressing as hoped. Although forecasts have long promised robotaxis across the country, autonomous driving has so far become a reality only in a few test regions, and often with problems. Reports from San Francisco of traffic disruptions and accidents involving autonomous vehicles have made headlines.

Why the technology is not yet ready

Research institutions such as the German Research Center for Artificial Intelligence (DFKI) emphasize that current AI models are not yet mature enough. Machine learning in particular falls short in safety-critical applications: systems often cannot explain their decisions, are not robust enough and require enormous amounts of data. Without clear explanations of how decisions are made, trust in the technology remains limited, which is a major obstacle to its use in road traffic.

Responsible AI: the solution?

To address this problem, DFKI and Accenture propose a strategy they call "Responsible AI". It is intended to ensure that AI systems are developed and used ethically, transparently and fairly. The approach concerns not only technology but also social acceptance. As AI expert Prof. Marco Barenkamp explains, it is essential for strengthening trust in AI and making it safer: "Responsible AI is essential for the success of autonomous driving technologies."

New approaches: Neuro-explicit AI

One promising approach is neuro-explicit AI. It combines the strengths of neural networks with symbolic reasoning and physical knowledge. The goal: transparent and robust decisions that remain reliable even in critical situations. For example, autonomous systems could better interpret visual data by taking physical properties such as light reflections into account.

A guide to ethical AI

In their guidelines, DFKI and Accenture call for more transparency, fairness and sustainability in AI development. The aim is to minimize risks such as discrimination or data protection violations. In the long term, "Responsible AI" could not only help the automotive industry gain the trust of society but also make the goal of safe autonomous driving a reality.

Guide from DFKI and Accenture: Responsible AI in the automotive industry: Techniques and use cases

(sp/Academic Society for Artificial Intelligence – Studiengesellschaft für Künstliche Intelligenz e.V.)