Since the Sysdig Threat Research Team (TRT) discovered LLMjacking in May 2024, this threat vector has evolved rapidly. Just as large language models (LLMs) keep improving and finding new applications, cybercriminals keep adapting and finding new ways to gain unauthorized access to these powerful systems.

The rapid expansion to new LLMs such as DeepSeek is particularly striking.

Back in September 2024, Sysdig TRT reported on the growing popularity of these attacks, and the trend has since intensified. According to the team: “We were not surprised that DeepSeek was targeted within days of its media exposure.” Large companies such as Microsoft are also affected: a lawsuit against cybercriminals who misused stolen credentials has caused a stir. The defendants are said to have used DALL-E to generate offensive content.

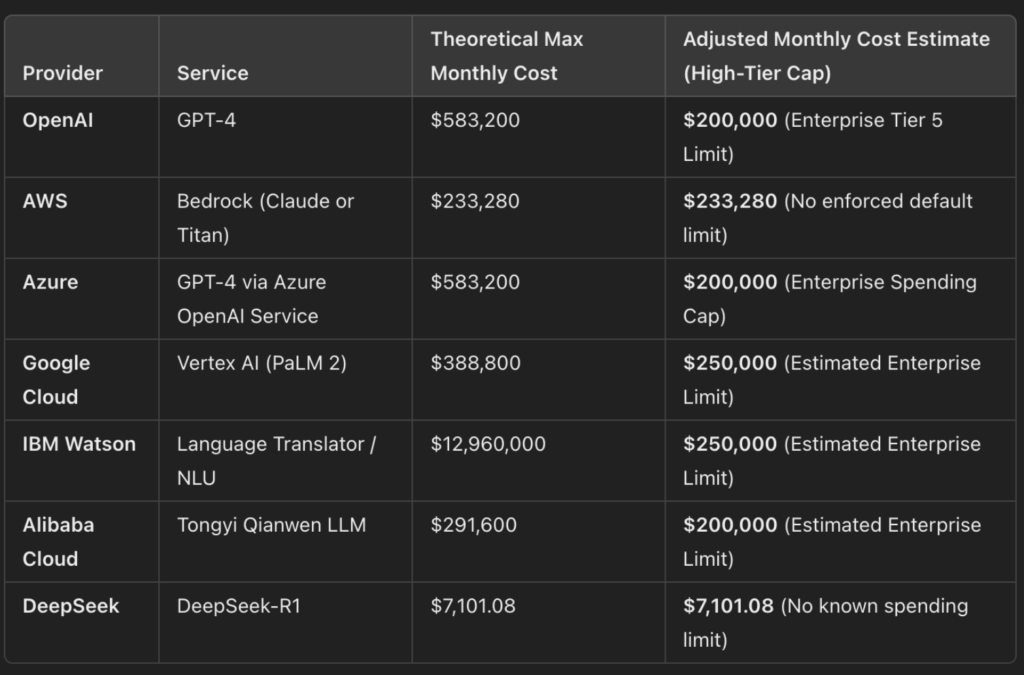

Why LLMjackers prefer to steal rather than pay

Operating LLMs can be costly – often hundreds of thousands of dollars per month. Sysdig TRT discovered over a dozen proxy servers using stolen credentials for various services such as OpenAI, AWS and Azure. The perpetrators rely on a simple principle: why pay when you can steal?

DeepSeek is an example of these rapid takeovers: On December 26, 2024, the DeepSeek-V3 model was released, and just a few days later it was already integrated into an ORP (oai-reverse-proxy) instance on HuggingFace. Things went even faster with DeepSeek-R1: just one day after its release, the model was already usable via a compromised proxy.

New methods and organized communities

LLMjacking is no longer an isolated phenomenon. Online communities have formed in which tools and techniques for abusing LLMs are shared. Platforms such as 4chan and Discord are particularly active. Credentials are traded via platforms such as Rentry.co, whose anonymity and ease of use make them an ideal meeting place for criminals.

While investigating cloud honeypots, Sysdig TRT discovered several TryCloudflare domains used in combination with ORPs to automate access to LLMs. The modus operandi: steal credentials, test them and resell them via proxies.

The attackers’ tricks: automation and anonymity

Systems with vulnerabilities in frameworks such as Laravel are particularly susceptible, because attackers exploit these flaws to extract credentials. They then rely on sophisticated scripts to verify the stolen credentials. These scripts share some common characteristics:

- Concurrency: Many stolen keys are tested in parallel for efficiency.

- Automation: Minimum effort, maximum profit.

Public software repositories are another rich source of credentials. API keys are often inadvertently committed to public repositories on platforms such as GitHub, where cybercriminals harvest them.
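To illustrate how easily a leaked key can be spotted in published code, here is a minimal defensive sketch that scans text for key-like strings before it is committed. The two patterns are assumptions chosen for illustration: the `AKIA` prefix followed by 16 characters matches the documented shape of AWS access key IDs, while the `sk-` pattern is only a rough approximation of OpenAI-style keys. The function name `find_candidate_keys` is hypothetical, and dedicated scanners use much larger, maintained rule sets.

```python
import re

# Illustrative patterns only (assumptions, not a complete rule set).
KEY_PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(AKIA[0-9A-Z]{16})\b"),
    "openai_style_key": re.compile(r"\b(sk-[A-Za-z0-9_-]{20,})"),
}


def find_candidate_keys(text):
    """Return (line_number, pattern_name, redacted_prefix) for each match."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in KEY_PATTERNS.items():
            for match in pattern.finditer(line):
                secret = match.group(1)
                # Keep only the first four characters so the report
                # does not leak the key a second time.
                findings.append((line_number, name, secret[:4] + "..."))
    return findings


if __name__ == "__main__":
    # AWS's documented placeholder key, not a real credential.
    sample = 'region = "eu-west-1"\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
    for finding in find_candidate_keys(sample):
        print(finding)
```

A check like this could run as a pre-commit hook; any hit should be treated as a reason to revoke and reissue the key, not just to delete the line, because the value may already be in the repository history.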

How to protect yourself against LLMjacking

LLMjacking is usually based on stolen credentials or access keys. MITRE has now included this attack vector in its ATT&CK framework to make the threat visible. Companies and developers should take the following protective measures:

- Secure access keys: Never hard-code API keys in source code. Instead, use environment variables or secret management tools such as AWS Secrets Manager or HashiCorp Vault.

- Use temporary credentials: Use solutions such as AWS STS AssumeRole or Azure Managed Identities.

- Rotate keys regularly: Implement automated processes for key rotation.

- Monitor for exposed credentials: Use tools like GitHub Secret Scanning or TruffleHog.

- Detect unusual activities: Detect suspicious behavior in cloud and AI services using monitoring tools such as Sysdig Secure.
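Two of the measures above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a replacement for a secret manager or a monitoring product: the environment variable name `LLM_API_KEY`, the function names, and the threshold of three times the median are all hypothetical choices made for the example.

```python
import os
import statistics


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not present in the environment."""


def load_api_key(env_var="LLM_API_KEY"):
    # LLM_API_KEY is a hypothetical name; use whatever your deployment defines.
    # Reading from the environment keeps the key out of the source tree.
    value = os.environ.get(env_var, "").strip()
    if not value:
        raise MissingSecretError(f"environment variable {env_var} is not set")
    return value


def flag_usage_spikes(hourly_requests, factor=3.0, min_history=3):
    """Return the indexes of hours whose request count exceeds
    `factor` times the median of all earlier hours."""
    spikes = []
    for i in range(min_history, len(hourly_requests)):
        baseline = statistics.median(hourly_requests[:i])
        if baseline > 0 and hourly_requests[i] > factor * baseline:
            spikes.append(i)
    return spikes


if __name__ == "__main__":
    # Made-up request counts for one API key, one value per hour.
    print(flag_usage_spikes([10, 12, 11, 9, 95, 10]))
```

The median is used as the baseline so that a single earlier spike does not raise the threshold for later hours. In practice the counts would come from provider usage logs or billing data, and a flagged hour would trigger a review of which key was used and from where.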

Conclusion: LLMjacking is here to stay

The increasing demand for AI models has created a black market for access to LLMs. Criminal networks are constantly developing new methods to monetize stolen credentials and use LLMs for illegal purposes. As cybercriminals adapt, organizations and users need to continuously improve their security strategies to stay ahead of this threat. The question is not whether LLMjacking will continue – but how we arm ourselves against it.

(vp/Sysdig)